What happens if we peek at experiments?

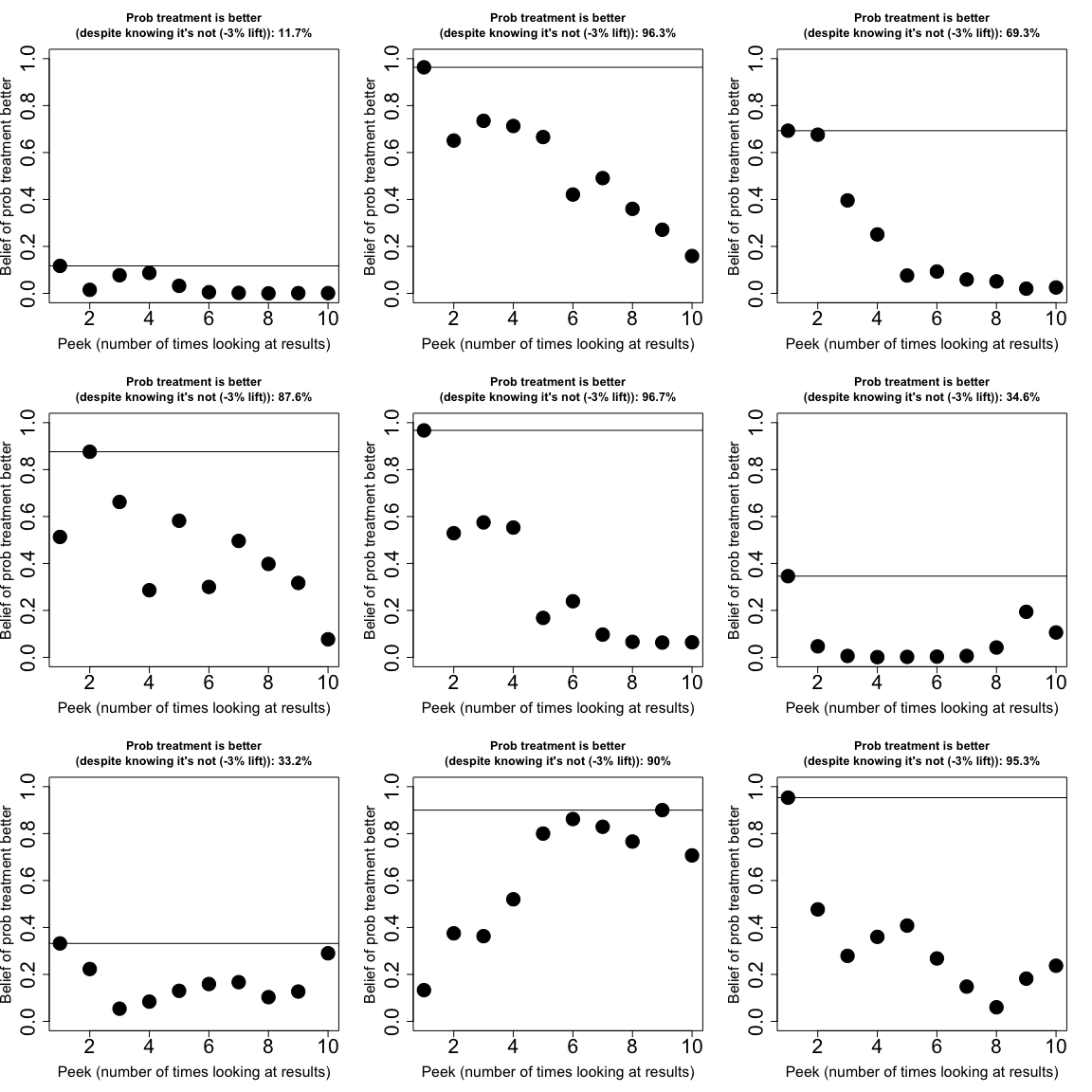

Below are nine separate experiments. Each experiment is planned to sample 2,500 entrants in each of two groups (5,000 in total). We’ll assume the control group in each converts at a rate 3% higher than the treatment; in other words, the treatment is worse. Suppose we peek at each experiment ten times, once after every 250 entrants per group. We want to avoid choosing the treatment since it’s worse.

How many times do we declare the treatment the winner and end the experiment?

```r
library(experimentr)
library(magrittr) # provides the %>% pipe

par(mfrow = c(3, 3)) # 3 x 3 grid, one panel per experiment

seq_len(9) %>%
  purrr::walk(function(i) {
    # One value per peek (plotted below as the belief that the treatment is better)
    experiment <- experimentr::perform_experiment(
      entrants = 5e3, # total in experiment (need to change to per group)
      a_conv = 0.5,
      b_conv = 0.5 * 1.03,
      # within a sample (to 250 means 500 total collected)
      entrants_to_peek_after = 250
    )

    plot(experiment ~ seq_len(length(experiment)), ylim = c(0, 1),
         pch = 19, cex = 3, cex.lab = 1.5, cex.axis = 2, cex.main = 1.5,
         xlab = "Peek (number of times looking at results)",
         ylab = "Belief of prob treatment better")

    # Mark the highest belief and the peek(s) at which it occurred
    abline(h = max(experiment), v = which(experiment == max(experiment)))

    title(main = glue::glue("Prob treatment is better\n(despite knowing it's not (-3% lift)): { scales::percent(max(experiment)) }"))
  })
```
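Nine plots give a feel for the problem, but it helps to put a rough number on it. The sketch below is a base-R stand-in, not the `experimentr` implementation: it assumes Beta(1, 1) priors, a 0.95 belief threshold for "declaring a winner", and a control that converts at 0.5 × 1.03 against a treatment at 0.5. The helper names (`prob_treatment_better`, `simulate_peeks`, `wrong_call_rate`) are made up for illustration. It estimates how often at least one peek would wrongly favour the treatment, for different numbers of peeks.

```r
# Sketch only: a base-R stand-in, NOT the experimentr implementation.
# Assumptions: Beta(1, 1) priors, a 0.95 stopping threshold, and
# illustrative conversion rates where the treatment is truly worse.
set.seed(42)

# Posterior probability that the treatment converts better than control,
# estimated by Monte Carlo draws from the two Beta posteriors.
prob_treatment_better <- function(conv_c, n_c, conv_t, n_t, draws = 2000) {
  control   <- rbeta(draws, 1 + conv_c, 1 + n_c - conv_c)
  treatment <- rbeta(draws, 1 + conv_t, 1 + n_t - conv_t)
  mean(treatment > control)
}

# Simulate one experiment and return the belief at each (evenly spaced) peek.
simulate_peeks <- function(n_per_group, n_peeks, p_control, p_treatment) {
  control   <- rbinom(n_per_group, 1, p_control)
  treatment <- rbinom(n_per_group, 1, p_treatment)
  peek_at   <- round(seq(n_per_group / n_peeks, n_per_group,
                         length.out = n_peeks))
  vapply(peek_at, function(n) {
    prob_treatment_better(sum(control[1:n]), n, sum(treatment[1:n]), n)
  }, numeric(1))
}

# Share of simulated experiments in which any peek crosses the threshold,
# i.e. we would have stopped and picked the (worse) treatment.
wrong_call_rate <- function(n_peeks, n_sims = 200, threshold = 0.95) {
  mean(replicate(n_sims, {
    any(simulate_peeks(2500, n_peeks, 0.5 * 1.03, 0.5) > threshold)
  }))
}

peeks <- c(1, 2, 5, 10)
rates <- sapply(peeks, wrong_call_rate)
names(rates) <- peeks
print(rates)
```

The exact rates depend on the seed, the threshold, and the number of simulations, so treat them as a rough guide. The comparison to look for is between a single look at the end and ten looks along the way.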

I’m not a fan of telling folks ‘no’ if a solution can be found. By limiting the number of times we peek, we also limit the potential for error in decision-making. Once we know that experiments can betray us, we can employ techniques that reduce the risk to a tolerable level. Any tolerable level can be achieved, but the cost scales with safety, usually as more data or more time. The important thing isn’t to prevent peeking but to enable folks to peek safely. Happy peeking at experiments!